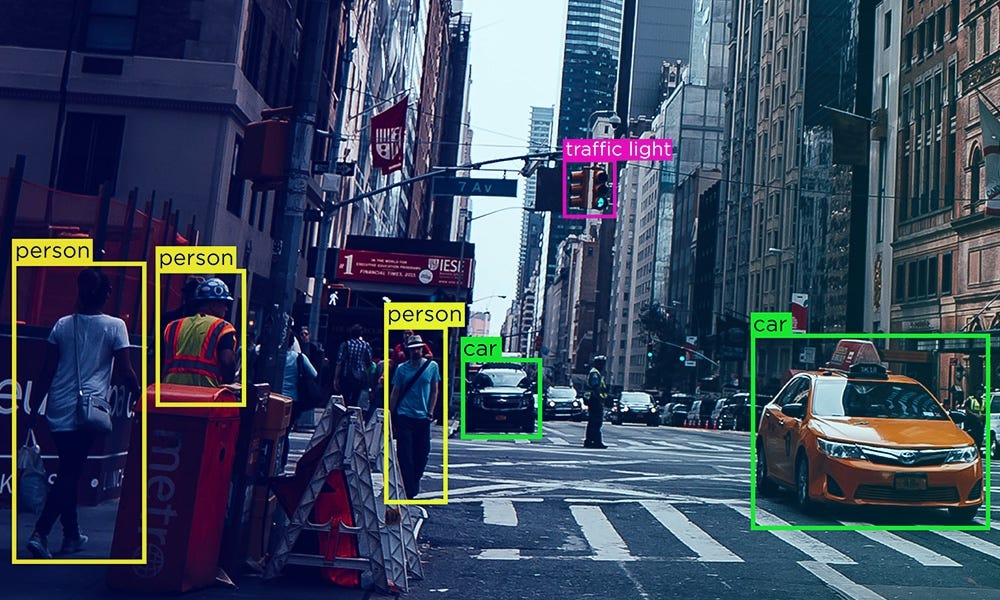

Given our current trajectory, labeling will become the paradigmatic business model and Scale AI the archetypal company.

What happens when >50% of the revenue of WSJ, Yelp, and Reddit comes from foundation model companies like OpenAI? Instead of serving human users, they will slowly transition into labeling companies: reviews data as a service, journalism data as a service, discourse data as a service.

The current business model dynamics in AI will result in a tragedy of the commons in which the long tail of human-generated data shrivels away. As a travel blogger, I have no incentive to keep blogging on WordPress when Perplexity summarizes my experiences and directs traffic away from my blog.

The vast majority of content on the internet is driven by social, not financial, motives. However, acquiring social status and recognition requires distribution, and AI is going to suck up that distribution by providing one-shot answers on a single platform. Therefore, there is no incentive to keep producing novel content into the void.

Yelp, Reddit, and other UGC platforms are essentially trading their organic users for whatever short-term revenue they can get as their content machines die out. When Redditors stop posting organically because traffic shifts to AI summarization tools, Reddit will need to pay professional "labelers" to generate realistic human content. Reddit becomes a "labeling" company, and its customer shifts from the user to OpenAI. The same will happen to the WSJ as its revenue shifts from subscriptions to contracts with foundation model companies.

In order to survive, these companies need to do one of the following:

1. Maintain strong retention in the face of competition from foundation models.
2. Pay professional labelers to review restaurants, create journalism that seems realistic and passionate, etc.
3. Compensate users for participation via some revenue-sharing mechanism.

(1) seems difficult: if you believe AI search businesses will be valuable at all, it is because they will absorb eyeballs from existing platforms. Some will survive, most will not.

(2) is the Scale-ification thesis, and it seems dreary. Scale AI is an incredible business, and I admire both the company and its founder, but for the sake of social surplus I hope Yelp and the WSJ don't adopt the same business model.

(3) translates into an incentive coordination problem, and this is the most interesting version of the internet!

I am fascinated by networked technologies that explore this sustainable vision of an open, organic internet. Ultimately, it requires a new business model that coordinates incentives to create from the bottom up, starting with individual creators, rather than from the top down via enterprise contracts. That this model is better for the internet is undeniable; whether it is more profitable is an open and critical question.

The space of creative monetization in the age of social media and generative AI, an era in which the technical costs of distribution and substantiation are approaching zero, is one of the truly open areas left in AI right now. We know models will eventually get faster, cheaper, and more powerful, but we do not know what the internet will look like in that world.

This is a good argument for social networks to not offer APIs, encourage paywalls, and block scrapers.

Not too radical an idea, given that we do this with books.

The complexity of the data labelling process may steadily increase as easier mental constructions continue to be labelled (abstracted) and labels are distributed. Maybe intelligence can be faked with sufficient data and large enough neural networks to the point where we can't say it is fake intelligence.